Hi, I'm Michael.

I'm studying computer science at UC Berkeley. Last summer, I interned at Amazon as a software development engineer on the Alexa Conversational Shopping team. I'm also doing due diligence and research on the AI hardware accelerator industry at Riot Ventures. You can sometimes catch me live at ugly.video/michael.

Past Work

April 2021

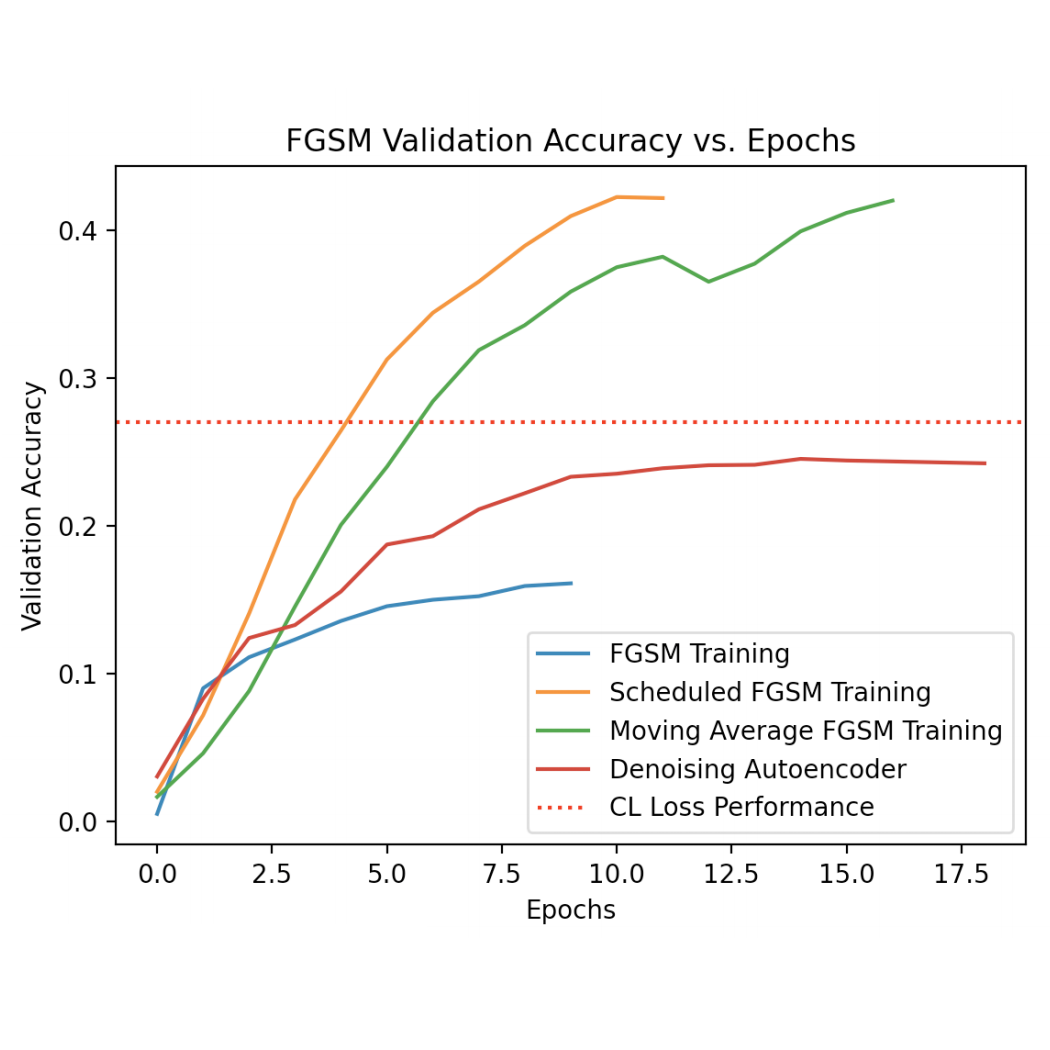

Improving Free Adversarial Training using Scheduling, Augmentations, and Denoising

Implemented scheduling of inner-loop constraints to optimize adversarial training. Used data augmentation and a weighted contrastive loss to improve classifier robustness against geometric image perturbations.

February 2021

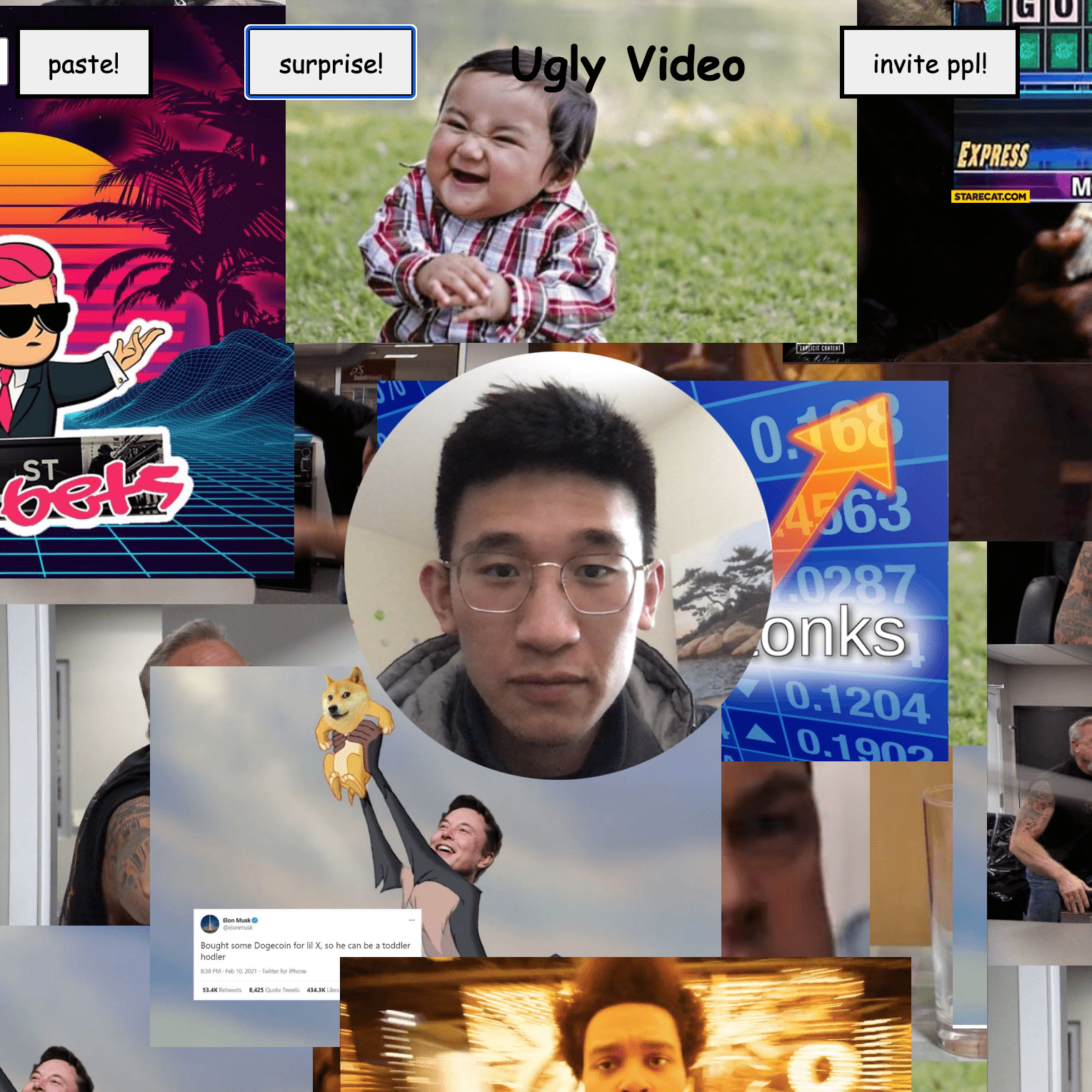

ugly.video - Less serious video conferencing

Used WebRTC to build a video conferencing tool for the browser. Implemented whiteboard and image sharing features. Designed a custom UI/UX to minimize friction in creating new meetings and inviting people.

December 2020

Sentiment analysis with Ludwig's deep learning toolkit

Compared three deep learning architectures (parallel-CNN, Bi-LSTM, BERT) on the Stanford Sentiment Treebank dataset. Find the source code

August 2020

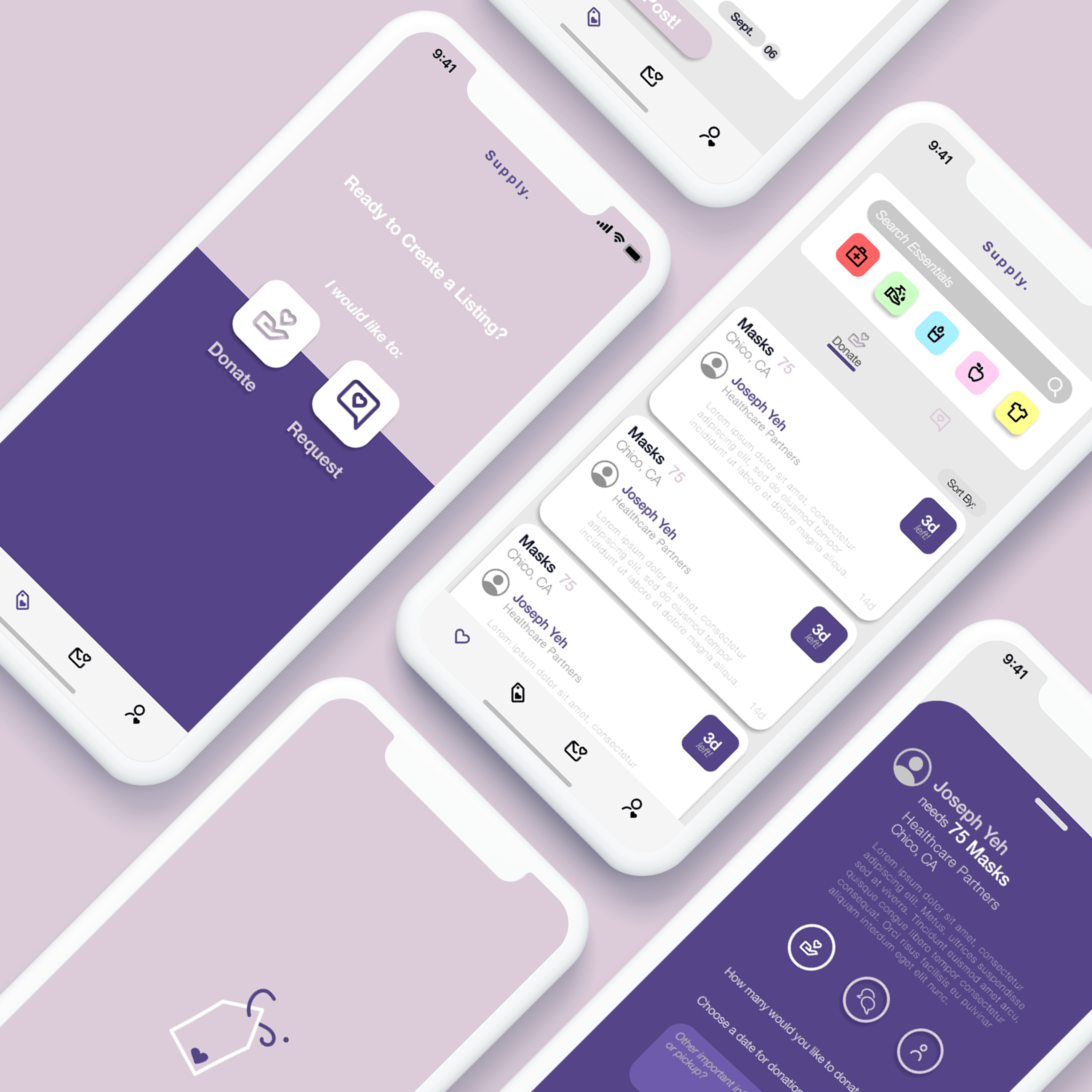

Supply: Enabling community sharing of essential items

Users can request and donate essential items to their local community through the Supply iOS app. Designed in . Made with Swift and .

June 2020

StatFinder: Parse the internet for relevant statistics

Users input the URL of a webpage. The web app queries a custom API that parses the page for relevant statistics and displays them in order of importance. Find the source code

February 2020

Crowd Insights: Real-time computer vision for analyzing crowds

Identifies clusters and lines of people in real time using PyTorch, Flask, and Google Cloud Compute. Visualizes human traffic behavior over time to support more informed business decisions.

October 2019

OskiBot: UC Berkeley course recommendation chatbot

Students input which graduation requirements they want to fulfill, and OskiBot responds with a list of its top five recommended courses that match those requirements. Find the source code

May 2018

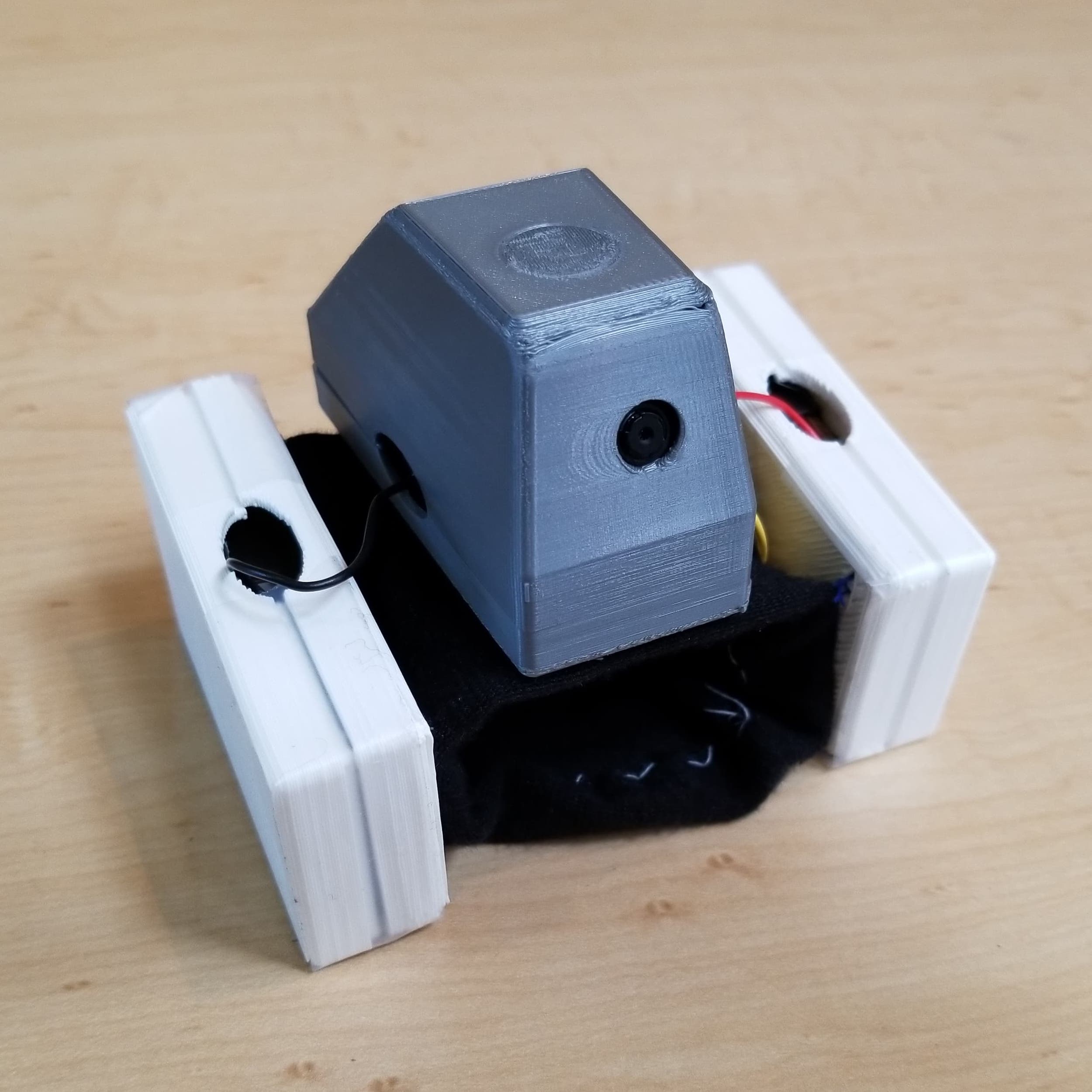

BlindSight: Assistive wristband for the visually impaired

A camera module, haptic motors, and an object recognition system built into a wristband. It tells the user what object they are pointing at and helps them locate the object through haptic feedback.